Recently our team has been tasked to write a very fast cache service. The goal was pretty clear but possible to achieve in many ways. Finally we decided to try something new and implement the service in Go. We have described how we did it and what values come from that.

Research and publish the best content.

Get Started for FREE

Sign up with Facebook Sign up with X

I don't have a Facebook or a X account

Already have an account: Login

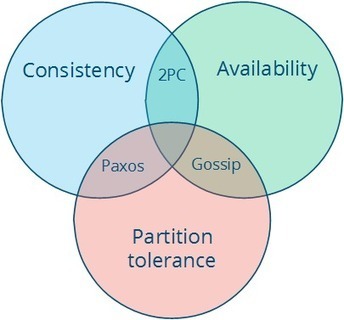

distributed architectures, big data, elasticsearch, hadoop, hive, cassandra, riak, redis, hazelcast, paxos, p2p, high scalability, distributed databases, among other things...

Curated by

Nico

Your new post is loading... Your new post is loading...

Your new post is loading... Your new post is loading...

No comment yet.

Sign up to comment

|

|